How can AI tools be used to check the factual accuracy of articles?

AI tools can automate initial fact-checking stages by leveraging natural language processing and machine learning to identify potentially inaccurate claims. This approach offers scalable assistance for human fact-checkers but currently functions best as a supplementary verification layer rather than a definitive solution.

Key principles involve comparing textual claims against trusted, structured knowledge bases (e.g., scientific databases, verified entity repositories) and analyzing patterns that may indicate misinformation, such as linguistic inconsistencies or statistical anomalies. Successful application requires high-quality, relevant training data representing diverse factual domains. Crucially, AI tools are only as reliable as their underlying data sources and algorithm design; they cannot independently "know" truth without reliable reference points. Human oversight remains essential for nuanced interpretation and final judgement.
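As a rough illustration of comparing a claim against a structured knowledge base, the minimal sketch below looks up a (subject, relation) pair in a small dictionary. The `KNOWLEDGE_BASE` contents, the relation names, and the `check_claim` function are all hypothetical and invented for this example; a real system would query a curated external source.

```python
from typing import Optional

# Hypothetical, hand-built knowledge base mapping (subject, relation) -> object.
# A production system would query a curated external source instead.
KNOWLEDGE_BASE = {
    ("paris", "capital_of"): "france",
    ("water", "boiling_point_celsius"): "100",
}


def check_claim(subject: str, relation: str, obj: str) -> Optional[bool]:
    """Return True if the claim matches the knowledge base, False if it
    contradicts it, and None if there is no entry to compare against."""
    known = KNOWLEDGE_BASE.get((subject.lower(), relation))
    if known is None:
        # No reference point: the tool cannot decide, so a human must.
        return None
    return known == obj.lower()


if __name__ == "__main__":
    print(check_claim("Paris", "capital_of", "France"))
    print(check_claim("Paris", "capital_of", "Germany"))
    print(check_claim("Mars", "capital_of", "Jupiter"))
```

The three-valued result is deliberate: a missing entry is reported as `None` rather than as "false", which mirrors the point that a tool cannot judge a claim it has no reference data for.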

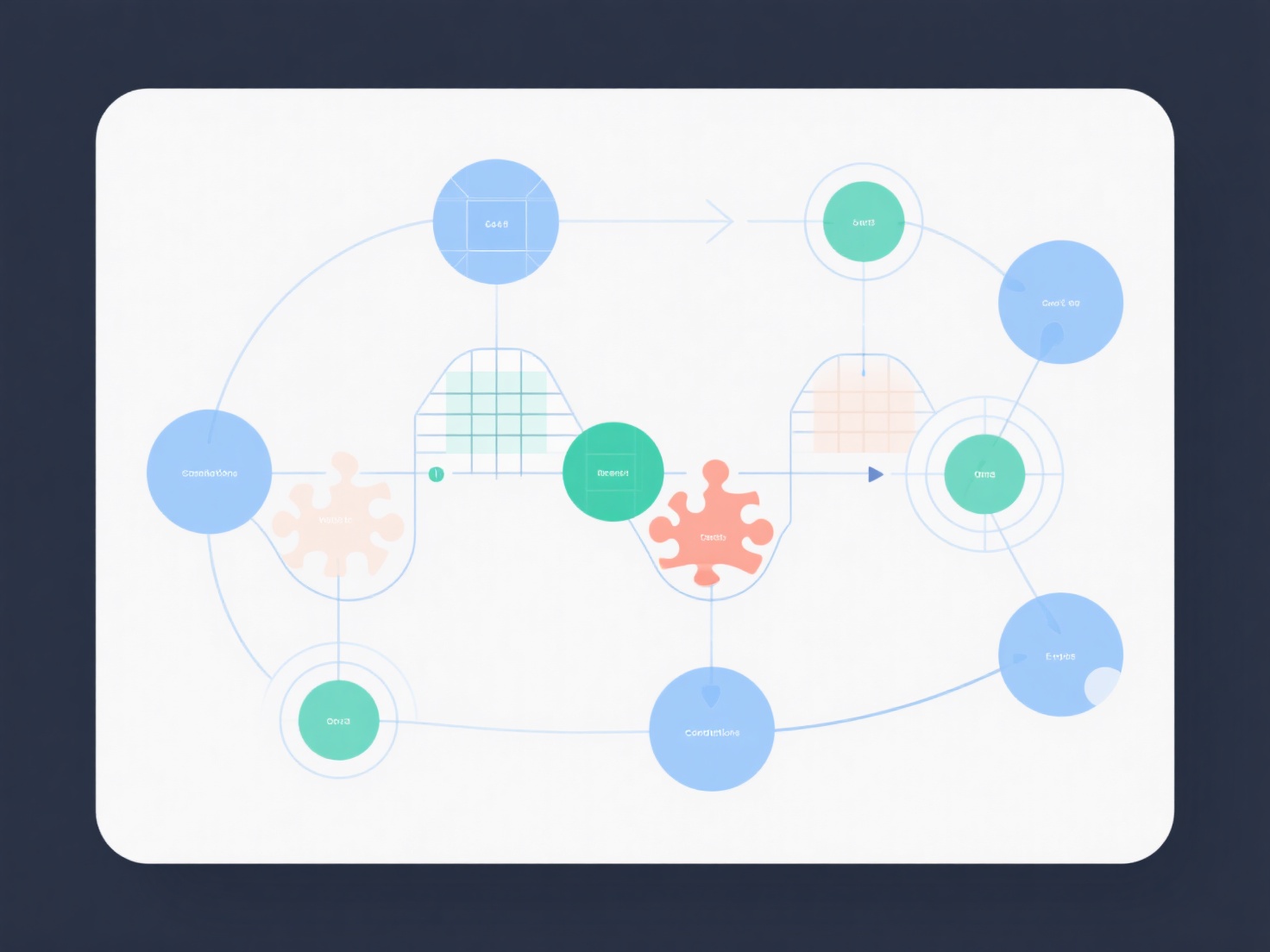

Implementation involves three core steps: 1) Preprocess the text to extract factual claims using named-entity recognition and relation extraction. 2) Cross-reference each claim against curated databases or reputable web sources, often by measuring semantic similarity. 3) Assign confidence scores to flagged inconsistencies so that reviewers can triage them. Typical applications include speeding up verification for journalists and combating misinformation on digital platforms, where the tools improve efficiency when integrated into editorial workflows.